Studying abroad in the bustling, often overcast city of London this year, I've found myself surrounded by a familiar sight in both the literal and technological senses: clouds. Much like an enthusiastic weatherman who relishes the diversity of cloud formations, I have a fascination with technology that is deeply rooted in cloud computing. Inspired by this, I've decided to embark on a four-part series exploring the different aspects of the cloud computing market and its potential areas of opportunity.

Just as a 7-day weather forecast guides us through a week of outfit planning, this series will steer us through the dynamic shifts and developments in cloud computing, offering a clear view of what lies ahead on the digital horizon.

So buckle up and make sure your tray tables are in their upright position because we're about to take off on a cloud-hopping adventure!

The Importance of Cloud Infrastructure

In the world of infrastructure software, the cloud is akin to the cumulonimbus and cirrus that grace our skies: diverse, omnipresent, and foundational to the ecosystem at large. It powers applications, stores data, and enables the seamless flow of information for industries across the globe. I focus heavily on the infrastructure segment of the enterprise world because of its robust unit economics and its usage-based pricing model, which grows as customers' usage grows. These traits are closely aligned with the venture returns model, and I believe my ability to understand how information flows through a tech stack is key to developing unique market insights. Although cloud computing isn't new, the sector remains ripe with opportunities across stages that could redefine existing market segments or create entirely new ones.

Cloud Infrastructure: A Four-Part Series

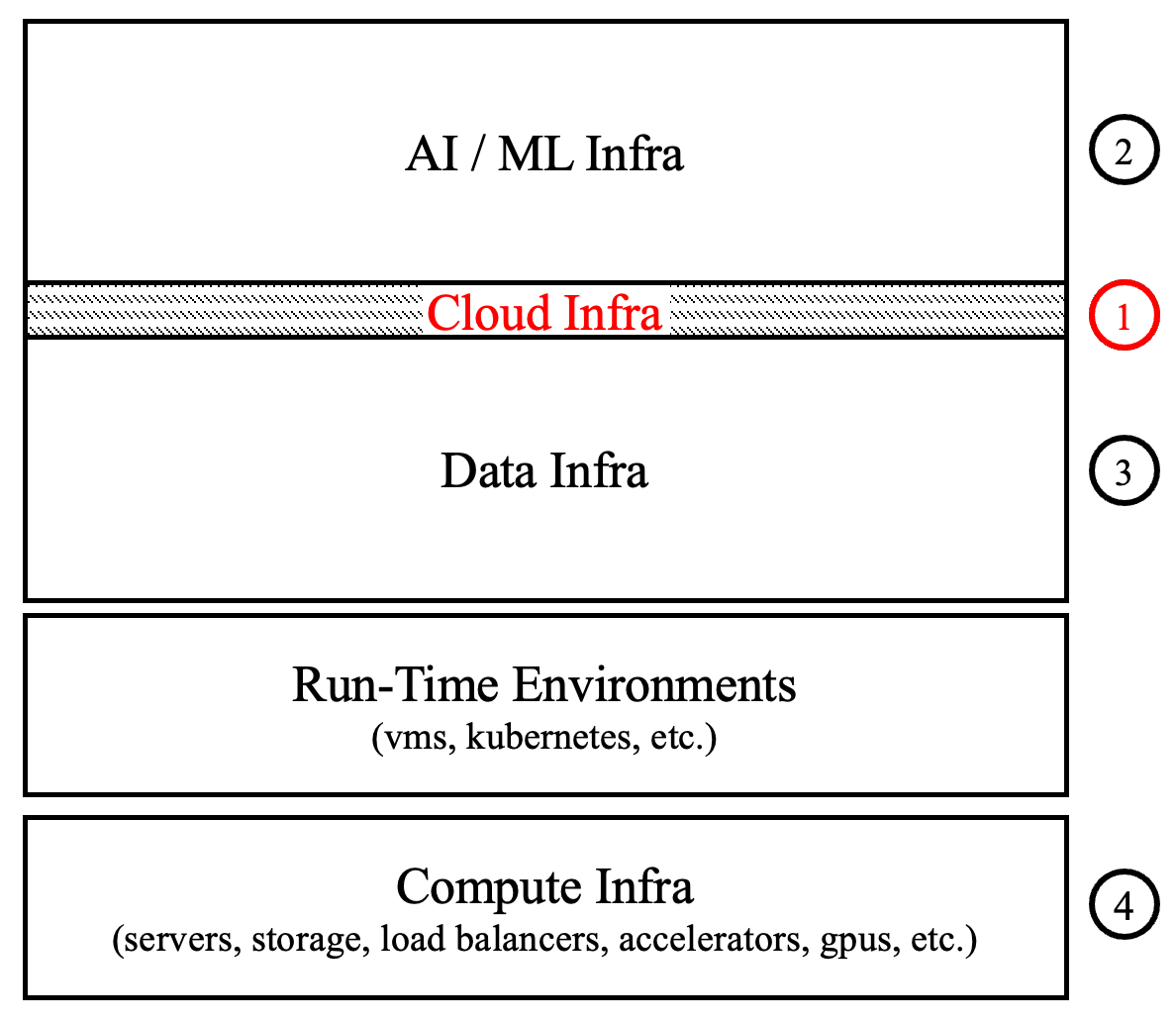

This series will unfold across four distinct segments, as depicted in the diagram above, each highlighting a different facet of cloud computing. The first post will be my longest yet and will cover cloud infrastructure, where we'll explore the vast network of virtual services and resources that form the backbone of today's enterprise environment. We'll navigate the complexities of the modern-day tech stack, examine why I believe infrastructure is the most attractive area to invest in, and set the foundation for subsequent posts. Ascending from this macro overview, we then move to AI/ML infrastructure, which is reshaping industries with smart capabilities. This segment, an abstraction layer above the cloud, infuses intelligence into the stack. It's where algorithms learn and insights are born, adding a layer of cognitive capability to the services resting on the cloud infrastructure below. Our journey then goes to the heart of the action: data infrastructure. This layer is where data resides and thrives. It's the crucial scaffold that supports data storage, management, and processing, and it links the intelligent AI/ML layer with the raw computational power beneath. Finally, we ground ourselves in compute infrastructure, the very bottom of the stack. Here, the physical reality of hardware (servers, storage units, load balancers, GPUs, and more) comes into play, underpinning every service and operation in the cloud. As we progress through each post, we'll illustrate how these layers coalesce to create the robust, interconnected tech stack that's driving innovation across industries.

Cloud Infrastructure Defined

Cloud infra refers to the collection of hardware and software components (servers, storage, networking equipment, and the virtualization software that pools them) that enable cloud computing.

What Core Problem Does Cloud Solve?

Think back to the days when companies relied on in-house IT teams that not only had to plan physical space for servers but also had to contend with limited scalability, slow deployment times, and frequent system downtime.

Now, even without specialized infra knowledge, developers can swiftly deploy apps in the cloud, democratizing app development. Not only does this cut costs for startups by reducing headcount and replacing up-front hardware purchases with pay-as-you-go spending, but it also allows companies to reach an MVP faster and more efficiently.

To understand the current landscape and future direction of the cloud computing market, let’s take out the history books and trace the pivotal moments and innovations that have shaped its evolution.

From Foundations to the Future

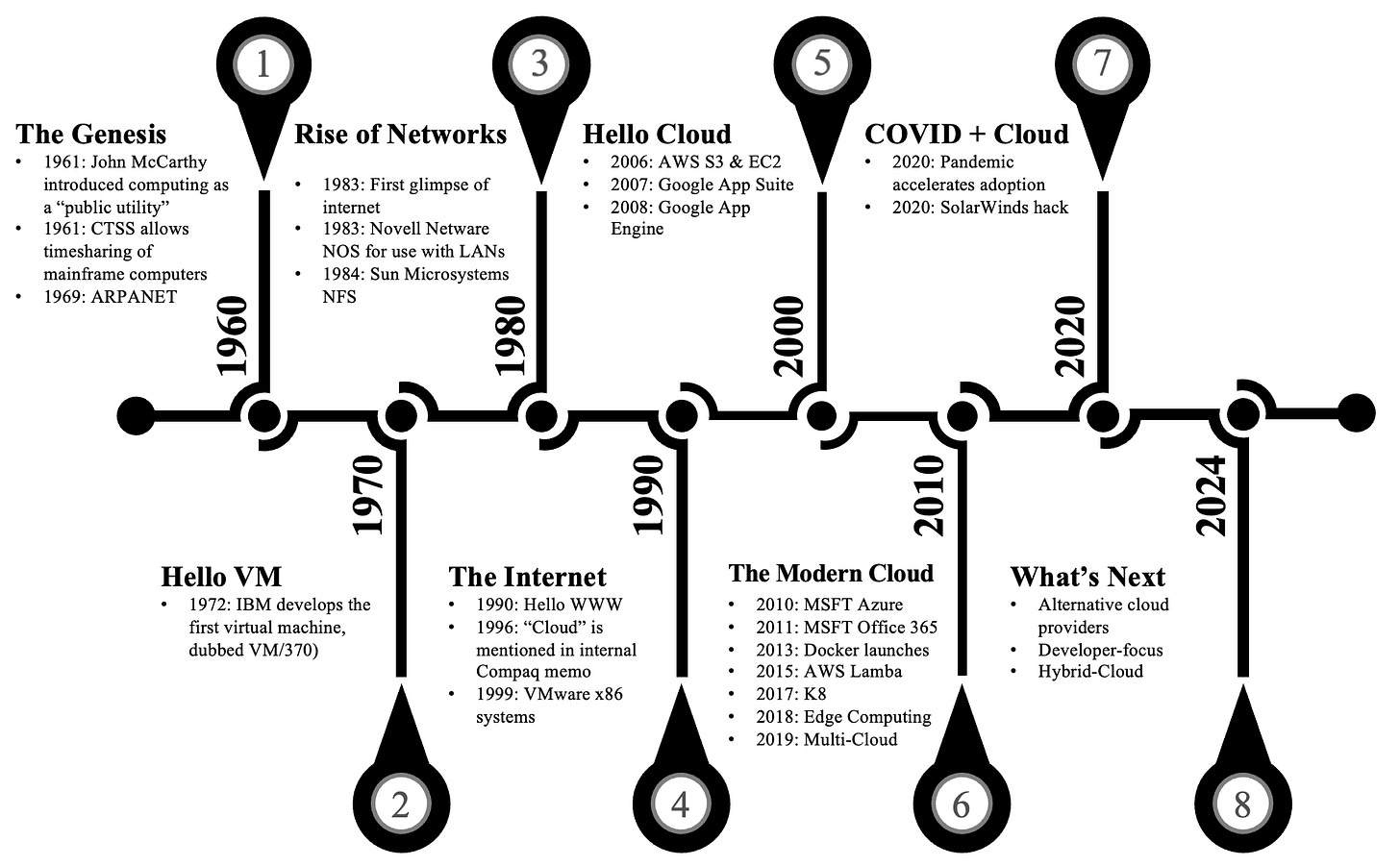

The journey of cloud computing is a remarkable tale of innovation and transformation that has reshaped technology and business over the past six decades. It began in the early days of computing, when the seeds of cloud computing were planted in the concepts of time-sharing and networked computing. If you'd prefer to save some time, skip ahead to the TL;DR section for the essential takeaways.

The Genesis (1960s):

In the 1960s, the dawn of cloud computing was heralded by pioneering ideas around computing as a utility. Visionaries such as John McCarthy envisioned a future where computing resources, much like water or electricity, could be shared among multiple users over a network. This period also saw technological breakthroughs like the development of the Compatible Time-Sharing System (CTSS) at MIT, which allowed multiple users to access a single mainframe computer simultaneously. This innovation was crucial for maximizing the utilization of expensive and scarce computing resources, enabling a broader range of applications and users to benefit from advanced computational capabilities. The creation of ARPANET in 1969 was another pivotal development, forming the beginning stages of what would become the Internet. Funded by the Advanced Research Projects Agency (ARPA), ARPANET initially linked computers at four sites (UCLA, the Stanford Research Institute, UC Santa Barbara, and the University of Utah), facilitating unprecedented levels of data and resource sharing. Although initially limited in scope, this network was the practical inception of a global network of computers, a fundamental aspect of cloud computing.

Virtualization and Networks (1970s-1980s):

The 1970s brought a revolution in computing with IBM's introduction of VM/370, which ushered in the era of virtualization technology. It allowed multiple operating systems to run concurrently on a single physical machine, opening a new age of efficiency in resource utilization. Virtualization remains a cornerstone of modern-day cloud computing, allowing computing resources to be allocated dynamically based on demand.

The 1980s brought rapid advancement in networking technologies, setting the stage for the interconnected digital world we inhabit today. This era was characterized by the proliferation of personal computers and the birth of the internet, catalyzing a shift toward distributed computing and laying the foundations for what we now call cloud computing. At the heart of this transformation were two companies: Novell and Sun Microsystems.

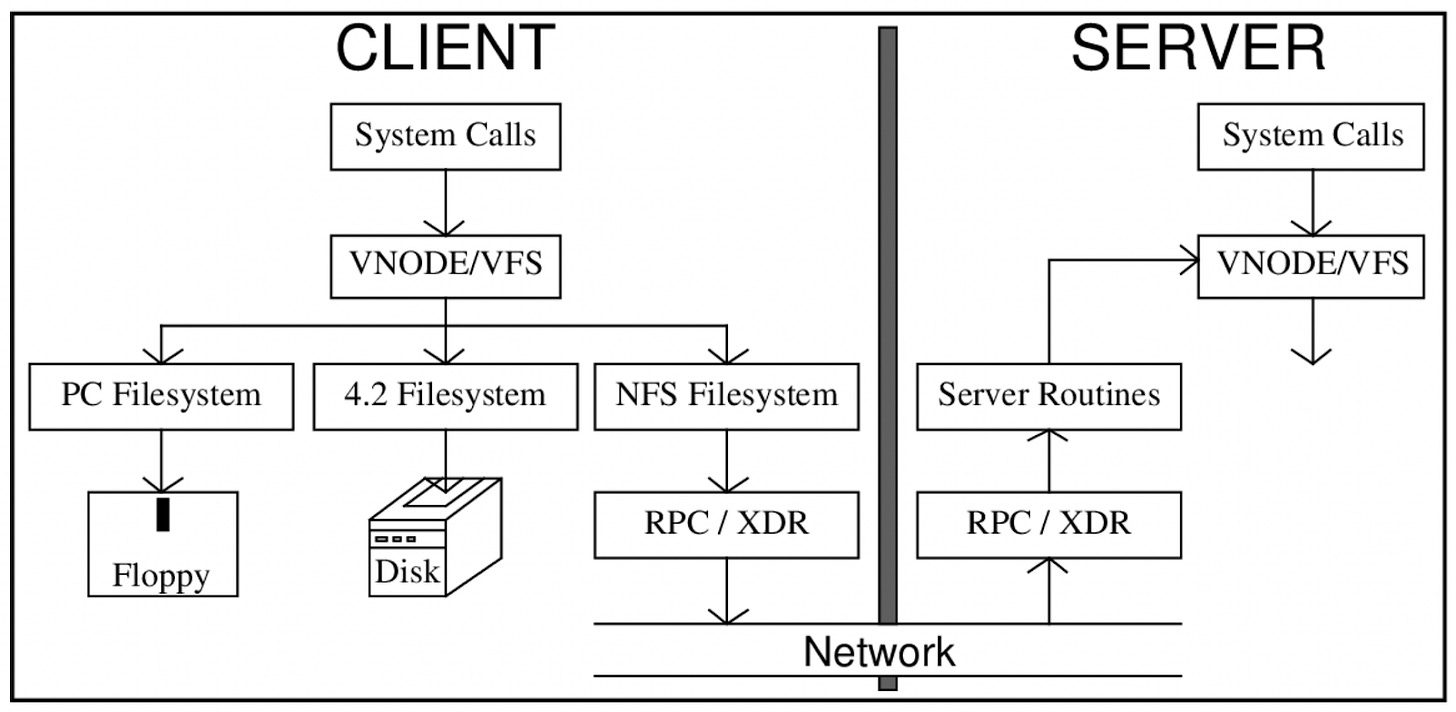

Novell NetWare emerged as a revolutionary network operating system (NOS) that enabled file and printer sharing between personal computers, a capability previously limited to larger, more expensive mainframe computers. This democratization of technology allowed small businesses and educational institutions to harness the power of networking, promoting a culture of collaboration and information sharing that was previously unimaginable. At the same time, Sun Microsystems' Network File System (NFS) offered a distributed file system that let a computer access files over a network as easily as if they were on its local disk. This technology was critical in the evolution of network computing, enabling the seamless sharing and management of data across different machines and operating systems. NFS's ability to foster a collaborative environment where resources could be efficiently utilized and shared served as an early blueprint for more sophisticated distributed computing models.

These advancements in networking technologies were complemented by the development of protocols such as TCP/IP, which became the standard for Internet communication, further facilitating the interconnectivity of networks around the globe.

Moreover, the 1980s were marked by an increasing awareness of the potential for these technologies to transform business operations, scientific research, and everyday life. As companies and institutions began to understand the value of networking and distributed computing, investment in these technologies grew, driving further innovation and setting the stage for the explosive growth of the Internet in the 1990s.

The Rise of the Internet and Web Services (1990s):

The 1990s can be described as a watershed decade in the history of technology, particularly with the advent of the World Wide Web (WWW), which revolutionized how information was accessed and shared globally. This period marked the beginning of the internet as we know it today, transforming it from a tool used primarily by academics and researchers into a ubiquitous platform that impacted every aspect of society. The introduction of the web browser made the internet accessible to a broad audience, democratizing information and laying the groundwork for the digital age.

In parallel, the term "cloud" began to gain traction within the technology community, signaling a pivotal move toward internet-based computing. The cloud represented a departure from traditional computing, where individuals and organizations managed their own hardware and software, toward a model where these resources were provided as a service over the internet. This transition was underpinned by the growing realization that computing could be delivered as a utility, much like electricity or water, allowing users to access and pay for computing resources on demand.

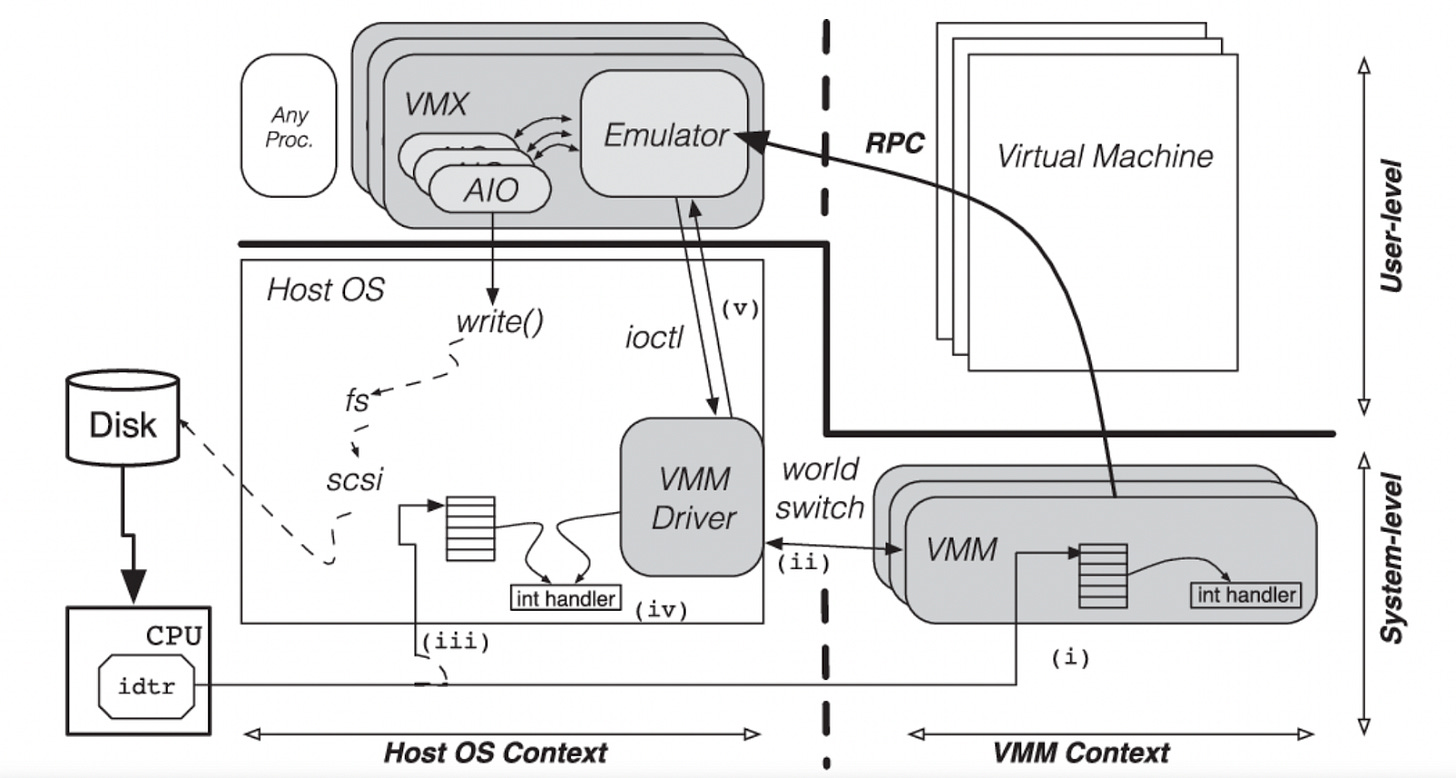

The late 1990s were also notable for a significant technological advancement with VMware's introduction of virtualization for x86 systems. This innovation was crucial, as it allowed for the abstraction of hardware, enabling a single physical machine to run multiple operating systems or applications simultaneously. This technology was a game-changer for the IT industry, as it dramatically improved the efficiency and flexibility of computing resources, reducing costs and enabling more scalable and robust IT infra.

Virtualization technology was a key enabler for the development of cloud services, as it allowed computing resources to be pooled and carved into virtual environments that could be allocated dynamically to meet users' changing needs. This capability was foundational to the Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS), and Software-as-a-Service (SaaS) models that define cloud computing today. (These terms are defined and explored further in a later section.)
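To make the idea of pooling concrete, here is a toy sketch (not how VMware or any real hypervisor actually schedules workloads) of a shared pool of physical hosts onto which VM requests are packed with a simple first-fit rule. The `Host` class, the `place_first_fit` function, and all capacities are hypothetical; real schedulers also weigh memory, affinity rules, and overcommit.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical server in the shared pool; capacity is in hypothetical vCPU units."""
    name: str
    capacity: int
    vms: list = field(default_factory=list)  # (vm_name, vcpus) tuples placed here

    @property
    def free(self) -> int:
        # Remaining capacity = total capacity minus what placed VMs have claimed.
        return self.capacity - sum(vcpus for _, vcpus in self.vms)


def place_first_fit(hosts, requests):
    """Place each (vm_name, vcpus) request on the first host with enough free capacity.

    Returns a dict of vm_name -> host name, with None when no host has room.
    """
    placement = {}
    for vm_name, vcpus in requests:
        target = next((h for h in hosts if h.free >= vcpus), None)
        if target is None:
            placement[vm_name] = None  # pool exhausted; a cloud provider would add hosts
            continue
        target.vms.append((vm_name, vcpus))
        placement[vm_name] = target.name
    return placement


if __name__ == "__main__":
    pool = [Host("host-a", 16), Host("host-b", 16)]
    requests = [("web", 8), ("db", 12), ("cache", 4), ("batch", 8)]
    print(place_first_fit(pool, requests))
    # {'web': 'host-a', 'db': 'host-b', 'cache': 'host-a', 'batch': None}
```

Notice how the small `cache` VM fills the gap left on `host-a`: pooling lets many workloads share hardware that would otherwise sit partly idle, which is the efficiency gain virtualization made possible.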

Cloud Services Emerge (2000s):

The dawn of the 21st century heralded a new era in computing, marked by the emergence of modern cloud services that would fundamentally transform the landscape of information technology. In 2006, Amazon Web Services (AWS) emerged as a pioneering force with the launch of its storage service, Simple Storage Service (S3), and its computing service, Elastic Compute Cloud (EC2). These services were revolutionary, offering scalable and flexible computing resources over the Internet. This move set the stage for the widespread adoption of cloud computing, showcasing a model where businesses could leverage vast computing resources without the need to invest in and maintain expensive physical infra.

Simultaneously, Google made significant strides in popularizing the cloud computing model through the rollout of its suite of web applications. These applications, starting with Gmail (2004) and Google Docs (2006) and later joined by Google Drive (2012), gave users powerful tools that could be accessed from any device with an internet connection, exemplifying the Software-as-a-Service (SaaS) model. Google's suite not only enhanced productivity and collaboration but also popularized the idea of storing and managing data in the cloud rather than on individual computers or local servers.

These developments were crucial in laying the foundation for what would become the SaaS and Platform-as-a-Service (PaaS) models. SaaS delivers software applications as a service over the internet, freeing users from complex software and hardware management. PaaS, on the other hand, provides a platform that lets customers develop, run, and manage applications without the complexity of building and maintaining the underlying infrastructure. These models played a significant role in democratizing access to computing resources, enabling businesses of all sizes to innovate and scale with unprecedented speed and efficiency.

Expansion and Standardization (2010s):

The 2010s stand out as a period where the concept of the cloud matured from an emerging trend to a fundamental technology. The decade was characterized by significant milestones and innovations that shaped the cloud computing landscape, making it more versatile, robust, and essential to businesses and consumers alike.

One of the hallmark events of this era was the aggressive expansion of cloud services by major technology players, most notably Microsoft with Azure and Office 365. Azure's entry into the cloud market signaled a major endorsement of cloud computing from one of the world's leading technology firms, accelerating the adoption of cloud services across sectors. Alongside Office 365, Azure exemplified the integration of cloud computing with productivity tools, making cloud services more accessible and useful for businesses and individuals worldwide.

Furthermore, the 2010s saw major advances in containerization and orchestration, epitomized by Docker and Kubernetes. Docker simplified the packaging, distribution, and management of applications, allowing them to run in isolated environments called containers. This significantly reduced compatibility and deployment issues, making applications more portable and easier to manage. Kubernetes then emerged as the de facto orchestration system for containerized applications, providing the tools needed to deploy, scale, and operate containers across clusters of hosts. Together, these technologies fostered a more agile, efficient, and scalable approach to application development and deployment.
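Kubernetes is built around a declarative model: you state the desired state (for example, three replicas of a container) and controllers continuously work to make the cluster's actual state match it. The toy loop below illustrates only that reconcile idea in plain Python. It does not use the Kubernetes API, and `reconcile`, the pod names, and the single-number notion of "state" are hypothetical simplifications.

```python
import itertools

# Hypothetical pod-name generator; real orchestrators assign names differently.
_pod_ids = itertools.count(1)


def reconcile(desired_replicas, running):
    """One pass of a desired-state control loop (greatly simplified).

    Compares how many replicas should exist with how many do, then starts or
    stops "pods" to close the gap. Returns the new list of running pod names.
    """
    running = list(running)  # work on a copy; leave the caller's list untouched
    gap = desired_replicas - len(running)
    if gap > 0:
        # Too few replicas (e.g. after a crash or a scale-up request): start more.
        running.extend(f"pod-{next(_pod_ids)}" for _ in range(gap))
    elif gap < 0:
        # Too many replicas (e.g. after a scale-down request): stop the newest ones.
        del running[gap:]
    return running


if __name__ == "__main__":
    pods = reconcile(3, [])        # scale up from zero
    print(pods)                    # ['pod-1', 'pod-2', 'pod-3']
    pods = reconcile(3, pods[1:])  # simulate pod-1 crashing; the loop replaces it
    print(pods)                    # ['pod-2', 'pod-3', 'pod-4']
    pods = reconcile(1, pods)      # scale down
    print(pods)                    # ['pod-2']
```

In a real cluster this kind of pass runs repeatedly, which is what lets an orchestrator heal failures and scale without a human restarting containers by hand.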

The decade also witnessed the rise of edge computing, which sought to bring data processing closer to the source of data generation, thereby reducing latency and bandwidth use. This was particularly relevant in the context of the Internet of Things (IoT) and mobile computing, where the speed of data processing and analysis could significantly impact performance and user experience. Edge computing represented a paradigm shift, emphasizing the importance of geographic distribution in cloud computing architectures.

Additionally, the concept of multi-cloud environments gained traction, as organizations sought to optimize their cloud strategies by leveraging the unique strengths of different cloud providers. This approach underscored the growing complexity and sophistication of cloud computing strategies, as businesses aimed to enhance resilience, flexibility, and cost efficiency.

Acceleration in the Digital Era (2020s):

The 2020s commenced with a global event that profoundly reshaped the technology landscape: the COVID-19 pandemic. This crisis catalyzed a swift and unparalleled adoption of cloud services across various sectors, as businesses, educational institutions, and government entities grappled with the sudden need to operate remotely. The pandemic underscored cloud computing's vital role in maintaining continuity and resilience in the face of unprecedented challenges, showcasing its capability to offer scalable, flexible, and efficient computing resources on demand.

During this period, cloud computing emerged not just as a technology enabler but as a critical infra backbone that supported the rapid shift to digital and remote operations. Organizations of all sizes leveraged cloud-based solutions to facilitate remote work, distance learning, and online commerce, thereby ensuring their survival and continued operation in a drastically altered global environment. This shift also highlighted the cloud's role in enabling rapid innovation and agility, allowing organizations to adapt their operations and services to meet changing demands and conditions.

The pandemic further accelerated trends toward digital transformation, pushing companies to fast-track their migration to cloud environments to benefit from the cloud's elasticity, cost-effectiveness, and accessibility. The increased reliance on cloud services for collaboration, communication, and business processes reflected a broader recognition of the cloud's strategic value beyond mere cost savings or operational efficiency.

Moreover, the 2020s have seen an increased focus on cloud security, data sovereignty, and regulatory compliance, as the reliance on cloud services raises important questions about data privacy, protection, and governance. These concerns have prompted cloud providers and users to invest in advanced security measures, develop robust data management policies, and explore hybrid or multi-cloud strategies to mitigate risks and comply with regulatory requirements.

The ongoing evolution of cloud computing technologies, including advancements in artificial intelligence (AI), machine learning (ML), and edge computing, continues to expand the possibilities for innovation and transformation across industries.

TL;DR

If you are one of those students who never did the assigned readings in university and scoured the internet for SparkNotes summaries, then these are the key takeaways from above.

1960 - 2000: Barriers were high, with app development costing millions due to the need for software, hardware, and personnel. Systems were hard to scale and unreliable, and companies like Oracle, SAP, and IBM dominated.

2010: Moving to the cloud involved compatibility issues, data loss, and downtime. Even with the cloud available, companies did not fully realize benefits like multi-tenancy and scalability. Key players included Azure, Amazon S3, Google Cloud, VMware, and Amazon EC2.

Today: Technologies are now optimized for the cloud, making app creation easier and unlocking the cloud's full potential, which has fueled strong results for the companies building on it. Companies like CrowdStrike, MongoDB, GitHub, HashiCorp, Datadog, Confluent, and Snowflake are major players in the ecosystem.

How Has The Stack Evolved?

First-principles thinking tells us that anything can be broken down into its most fundamental components. For cloud infra, the defining theme has been:

Rising Complexity

As technology has progressed and businesses have become more verticalized, the legacy tech stack has continued to unbundle. This represents a paradigm shift from the monolithic to the modular, from all-in-one solutions to specialized, a la carte services, with solutions now tailored to the specific needs of each business.

I believe this concept can be better understood by illustrating a legacy tech stack that would have appeared in the early 2000s.

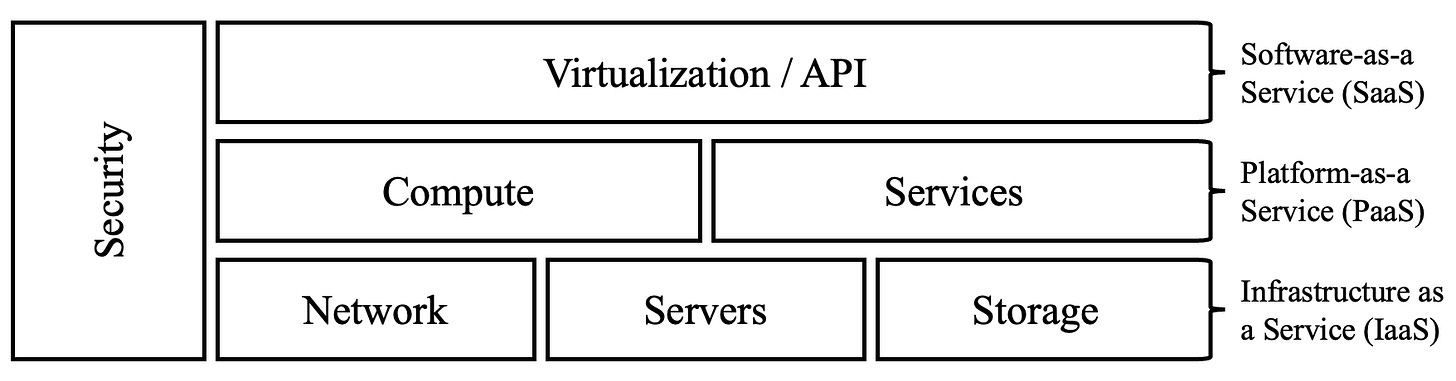

These building blocks show that legacy stacks often had only a few solutions within each bucket. These solutions generally fall into one of the following three categories:

SaaS: Shines with its out-of-the-box functionality, offering applications ready for use, freeing users from the complexities of software maintenance and updates

PaaS: Provides developers the environment to build, deploy, and manage applications without the hassle of maintaining the underlying infrastructure

IaaS: Lays out the virtualized hardware landscape on a pay-as-you-go basis, granting companies the agility to scale with ease, minus the CapEx and maintenance of physical hardware

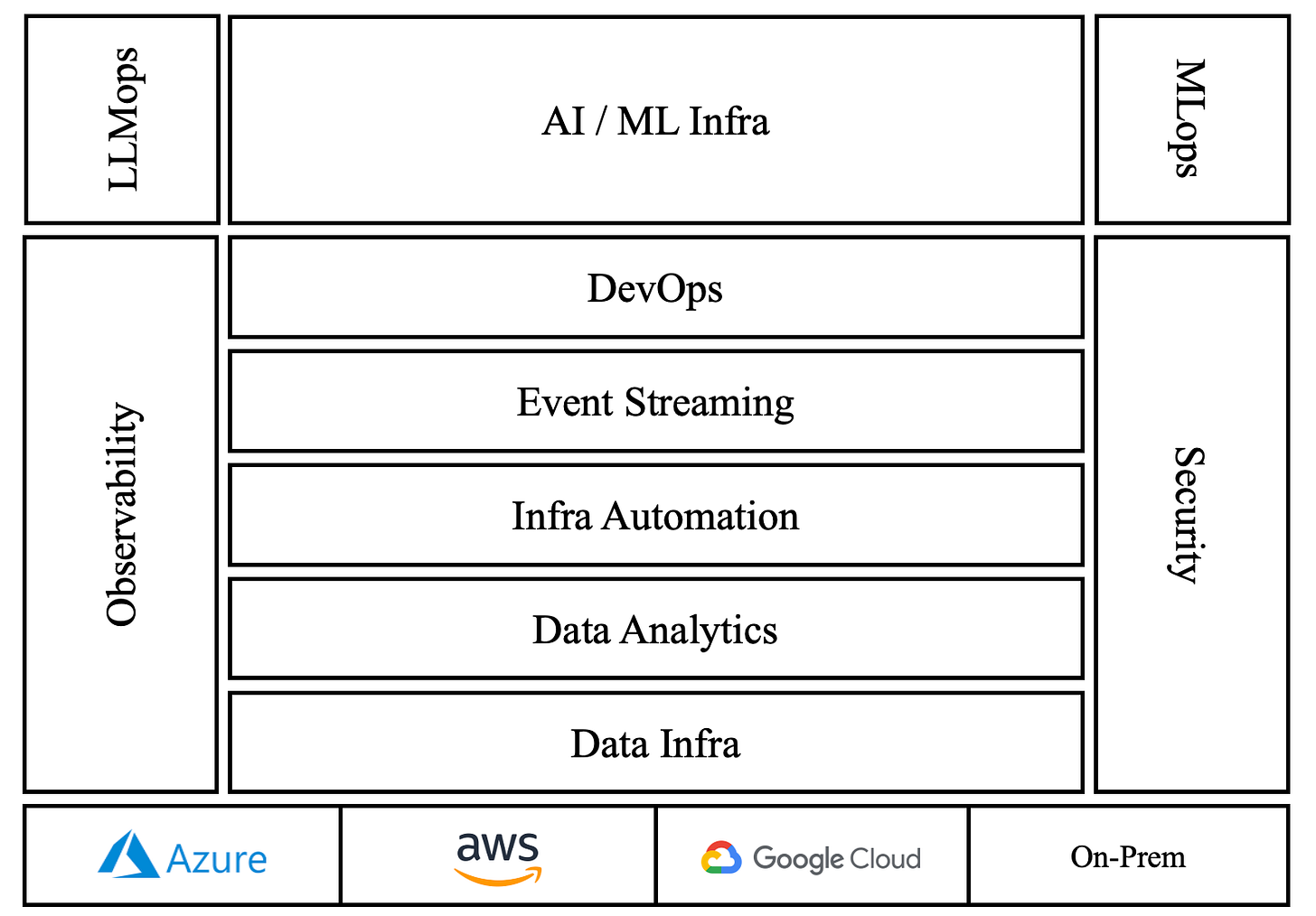
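The pay-as-you-go point behind IaaS is easiest to see with a little arithmetic. The sketch below compares keeping enough servers running for peak demand all day with paying only for the server-hours actually used. The demand curve and hourly rate are invented for illustration, and using the same rate for both models is a simplifying assumption that isolates the effect of elasticity; it says nothing about real cloud or hardware prices.

```python
def provisioned_for_peak_cost(hourly_demand, rate_per_server_hour):
    """Cost when capacity is sized for the busiest hour and kept on all day."""
    peak_servers = max(hourly_demand)
    return peak_servers * len(hourly_demand) * rate_per_server_hour


def pay_as_you_go_cost(hourly_demand, rate_per_server_hour):
    """Cost when you pay only for the servers actually needed each hour."""
    return sum(hourly_demand) * rate_per_server_hour


# Hypothetical 24-hour demand (servers needed per hour): quiet night, busy day, moderate evening.
demand = [2] * 8 + [10] * 8 + [4] * 8
rate = 0.50  # hypothetical $ per server-hour, identical in both models

peak_cost = provisioned_for_peak_cost(demand, rate)  # 10 servers * 24 h * $0.50 = $120.00
elastic_cost = pay_as_you_go_cost(demand, rate)      # 128 server-hours * $0.50 = $64.00
print(f"sized for peak: ${peak_cost:.2f}, pay-as-you-go: ${elastic_cost:.2f}")
```

The spikier the demand, the wider that gap becomes, which is why elastic capacity matters most for workloads with uneven traffic.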

In the next-generation cloud infra stack, these buckets become far more specific. I couldn’t find anyone who had put this next-generation tech stack into a simple visual, so I created the following graphic and dubbed it the Modern Day Cloud Infra Stack:

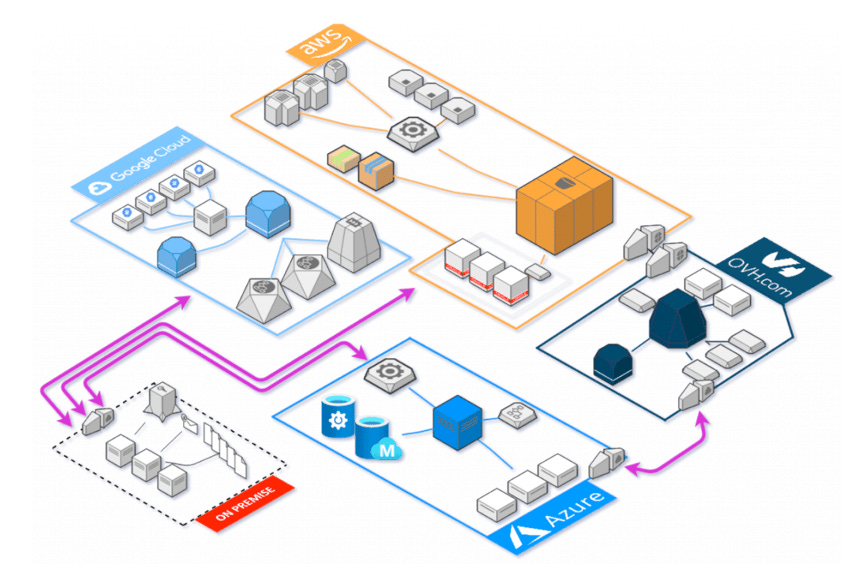

In this modern technology stack, we see a complex yet harmoniously integrated landscape. Starting at the base, the three major hyperscalers and on-prem servers make up the four most popular deployment environments. These can be mixed and matched through what are known as hybrid and multi-cloud strategies, and because each hyperscaler has different strengths, such strategies are increasingly common. Built on this foundation is the information architecture that supports the collection, storage, and management of data, dubbed data infra. This layer forms the terrain upon which all applications and services stand.

Directly above, the data analytics layer serves as the interpretive lens, transforming raw data into actionable insights; it is the cognitive process that gives meaning to the myriad data points. Infrastructure automation comes next, introducing efficiency and precision: repetitive tasks are automated, freeing human intellect for more creative and strategic work. Continuing upward, event streaming acts as the nervous system of the stack, enabling real-time processing of and reaction to events as they occur, so the enterprise is as responsive as it is intelligent. Then comes DevOps, the connective tissue that marries development with operations to improve collaboration and increase deployment frequency. Stretching across every layer of the stack, observability and security are pivotal in upholding this digital infra. Observability ensures that the system's health and performance are constantly monitored and evaluated, while security acts as a shield protecting the realm against threats and vulnerabilities.

Finally, we reach the abstraction layer above the stack and the components that make it possible. AI/ML infra is where AI and ML models are nurtured, allowing businesses to push the boundaries of predictive modeling and analytics. Running the length of this layer are LLMOps and MLOps, specialized operational practices tailored for AI and machine learning. LLMOps focuses on the lifecycle management of large language models, ensuring that every model serves its purpose effectively from inception to retirement. MLOps, on the other hand, concentrates on operationalizing machine learning, emphasizing the deployment, monitoring, and maintenance of models in production environments. The goal across this stack is to leverage large amounts of data to draw insights that are secure and more accurate than those of legacy models. Keep in mind that this is an oversimplified stack; each section has many subsections that allow this ecosystem to be seamlessly integrated.

Cloud Computing Market Dynamics

Infrastructure vs Application Software:

If you've read this blog before, you know that I am a big fan of infra software. In my first post, titled "Transformers: The Infrastructure Powering the Future," I included a snippet breaking down the application vs. infra share of the cloud computing market from a public market perspective. Tomasz Tunguz, previously Managing Director at Redpoint and now a board member of Dremio and General Partner at Theory Ventures, did an incredible job breaking down this market. If you look at the three largest cloud providers and count only the cloud segments of their businesses, their combined market capitalization is in the ballpark of $2.1 trillion. Now, take a step back and look at the 100 largest cloud companies in the application layer: you arrive at just about the same value. In other words, three infrastructure providers are worth roughly as much as the hundred largest application companies combined. One of the key reasons for this value accretion is the nature of the business model itself, which is closely aligned with the venture returns model.

Business Model:

When we think about infra software business models, one key concept should come to mind:

Usage-based pricing

When Salesforce pioneered the Software-as-a-Service (SaaS) model in 1999, it captivated many investors with its innovative billing structure. SaaS companies charge customers through subscription models, which gives rise to the compelling advantage of recurring revenue secured by contracts. This approach not only stabilizes revenue forecasts but also drives higher valuations of the company due to the reliability and predictability of its income streams.

The graphic below illustrates this concept by showing popular software companies and their Net Dollar Retention (NDR), which measures how much a company’s existing customers expand or contract their spending year over year (YoY). On the left side, under "Seat-Based Pricing," we see companies like Adobe (creative), Asana (project management), Figma (product design), HubSpot (CRM), Notion (productivity), and Workday (human capital management). These represent SaaS providers that charge based on the number of user seats or licenses sold.

This contrasts starkly with companies in the infra segment. Under "Usage-Based Pricing," we see companies such as Confluent (event streaming), CrowdStrike (cyber), HashiCorp (infra automation / cyber), MongoDB (database), Snowflake (database/analytics), and Twilio (communications). These companies price their services on actual usage rather than the number of seats. This is highly appealing because revenue is intrinsically tied to customer success, so pricing aligns with the value delivered. Snowflake, for example, benefits as customers expand their data management and analytics capabilities: as customers grow and their data usage increases, Snowflake's revenue scales in tandem, directly tying it to the value customers derive from the platform.

We will get into more of the metrics investors look for below, but from an NDR perspective, infra SaaS shows a 7% higher NDR. This supports the view that infra customers increase their spending faster year over year than application customers do.
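For readers who want the mechanics, NDR divides what last year's customers pay today by what those same customers paid a year ago; churned customers count as zero and brand-new customers are excluded. The sketch below computes NDR for a made-up cohort and contrasts seat-based and usage-based billing for a single hypothetical customer whose headcount grows modestly while its data volume doubles. All names, prices, and figures are illustrative assumptions, not the data behind the 7% figure.

```python
def net_dollar_retention(prior_revenue, current_revenue):
    """NDR = this year's revenue from last year's customers / their revenue last year.

    Customers missing from current_revenue (churned) count as 0;
    customers missing from prior_revenue (new) are ignored.
    """
    starting = sum(prior_revenue.values())
    retained = sum(current_revenue.get(customer, 0) for customer in prior_revenue)
    return retained / starting


def seat_based_bill(seats, price_per_seat):
    return seats * price_per_seat


def usage_based_bill(units_consumed, price_per_unit):
    return units_consumed * price_per_unit


# Hypothetical customer: headcount (seats) grows 10%, data processed doubles.
seat_growth = seat_based_bill(110, 1_000) / seat_based_bill(100, 1_000)  # 1.1x
usage_growth = usage_based_bill(2_000, 50) / usage_based_bill(1_000, 50)  # 2.0x

# Hypothetical cohort (annual revenue in $): A expands, B contracts, C churns, D is new.
last_year = {"A": 100_000, "B": 50_000, "C": 50_000}
this_year = {"A": 180_000, "B": 40_000, "D": 75_000}
ndr = net_dollar_retention(last_year, this_year)  # 220,000 / 200,000 = 1.10, i.e. 110%
print(f"seat growth {seat_growth:.2f}x, usage growth {usage_growth:.2f}x, NDR {ndr:.0%}")
```

Under usage-based pricing, the same customer's bill grows with its workload rather than its headcount, which is the mechanism that pushes infra NDR higher.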

Unit Economics:

We as investors love nothing more than a beautiful spreadsheet. Apart from using Excel as a creative outlet for the skills Wall Street banks trained into us, we love to dive into unit economics to see the underlying drivers of a company's financial performance. Delving into the numbers allows us to dissect and understand the cost and revenue contribution of each unit sold or customer acquired.

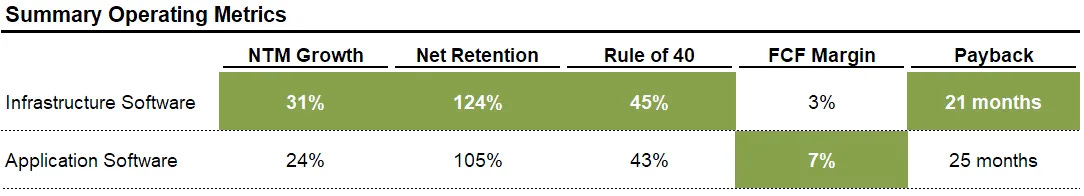

In the table above, we see five common metrics that investors track for software. There is a lot of green in the infra category, which I will break down briefly below.

NTM Growth: Infrastructure software shows a next twelve months (NTM) growth rate of 31%, which is higher than the application software’s growth rate of 24%.

Net Retention: Infrastructure software has a net retention rate of 124%, indicating customers are spending 24% more than they did in the previous year. Application software has a lower net retention rate of 105%.

Rule of 40: The “Rule of 40” is a SaaS benchmark stating that a company's growth rate and profit margin combined should be at least 40%. Infrastructure software clears this benchmark at 45%, and application software also clears it, though slightly lower at 43%.

FCF Margin: Free Cash Flow (FCF) Margin for infrastructure software stands at 3%, suggesting that for every dollar of revenue, 3 cents are converted to free cash flow. Application software, however, has a higher FCF Margin at 7%.

Payback: The payback period, which is the time it takes a company to recoup its customer acquisition cost, is 21 months for infrastructure software and longer for application software at 25 months.
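The metrics above reduce to a few simple formulas. The sketch below uses common definitions: FCF margin as free cash flow over revenue, Rule of 40 as growth rate plus profit margin (FCF margin here; some firms use EBITDA margin instead), and CAC payback as acquisition cost divided by the monthly gross profit a new customer brings in. Treat these definitions as assumptions, since benchmarks vary in the details, and note that the example company and customer are hypothetical. One more caveat: a median of sums need not equal the sum of medians, so a table's Rule of 40 row is not necessarily its growth and FCF rows added together.

```python
def fcf_margin(free_cash_flow, revenue):
    """Fraction of each revenue dollar converted into free cash flow."""
    return free_cash_flow / revenue


def rule_of_40_score(growth_rate, profit_margin):
    """Growth rate plus profit margin, both as fractions; 0.40 or more passes."""
    return growth_rate + profit_margin


def cac_payback_months(cac, monthly_revenue_per_customer, gross_margin):
    """Months of gross profit needed to earn back the cost of acquiring a customer."""
    return cac / (monthly_revenue_per_customer * gross_margin)


# Hypothetical company: $200M revenue, $14M free cash flow, 35% expected growth.
margin = fcf_margin(14e6, 200e6)            # 0.07, i.e. a 7% FCF margin
score = rule_of_40_score(0.35, margin)      # about 0.42
passes = score >= 0.40                      # True

# Hypothetical customer: $30k to acquire, $2k/month of revenue at a 75% gross margin.
payback = cac_payback_months(30_000, 2_000, 0.75)  # 30,000 / 1,500 = 20.0 months
print(f"FCF margin {margin:.0%}, Rule of 40 score {score:.0%}, payback {payback:.0f} months")
```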

A Private Market Perspective:

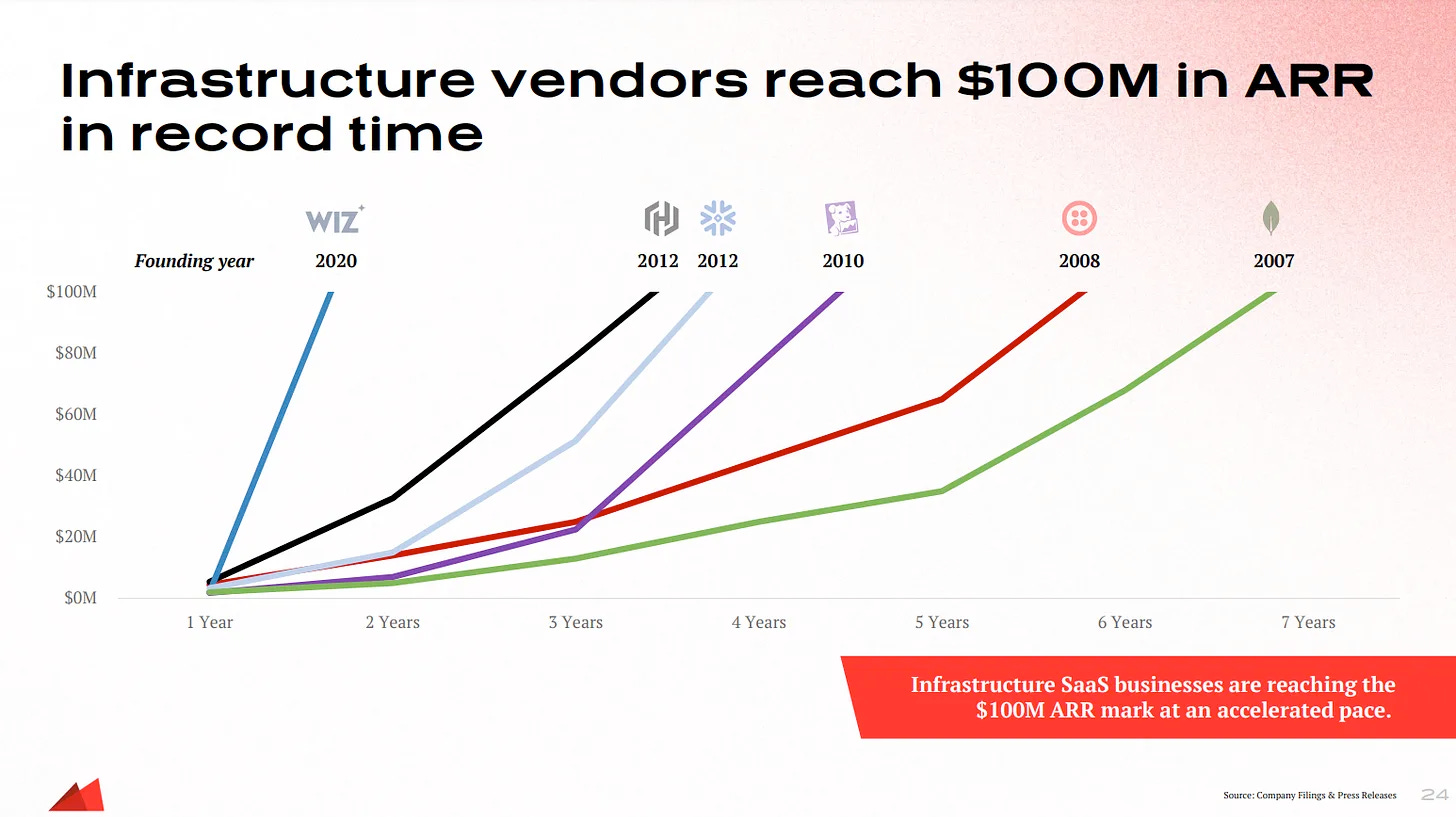

As companies become more efficient and the cloud becomes more essential to the enterprise environment, we are seeing companies reach the $100M annual recurring revenue (ARR) threshold much faster.

The steepest curve belongs to Wiz, a cloud security company (a segment of cloud infra) founded in 2020, which reached the $100 million ARR milestone in an astonishing one-year timeframe. Other infra companies, founded between 2007 and 2012, took longer, anywhere from three to seven years, to reach the same benchmark.

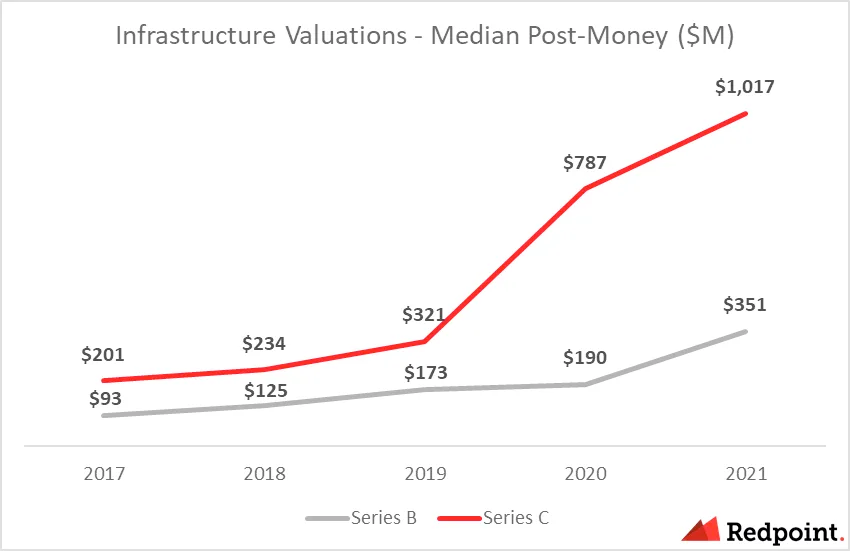

Investors have already noticed this trend, as surging Series B and Series C valuations show. The typical Series C valuation for an infra company has climbed to approximately $200M. Moreover, in 2021, founders in the infrastructure software space were securing post-money valuations of around $1 billion, roughly a fivefold increase. This trend highlights investors' confidence as they underwrite larger outcomes, equipping these companies with several years of capital on highly favorable terms. Note that this data only extends through 2021. As we fast-forward to the present day, I anticipate that the trend will continue, although on less favorable terms, as investors increasingly seek to guard against downside risk.

A Public Market Perspective:

Now, turning our attention to the public markets, we observe a parallel trend. As venture capitalists, we often look to public markets to guide private valuations, with the stage of investment determining how closely we follow them. Typically, the earlier the investment stage, the longer private valuations lag public market fluctuations.

The numbers speak for themselves. In a 2021 deep-dive, Redpoint found that AWS, Azure, and Google Cloud had reached a combined $100B revenue run-rate. You might expect a market of that size to be fully mature, yet businesses like Confluent, Datadog, HashiCorp, and Snowflake continue to show impressive growth, much of which can be attributed to usage-based pricing.

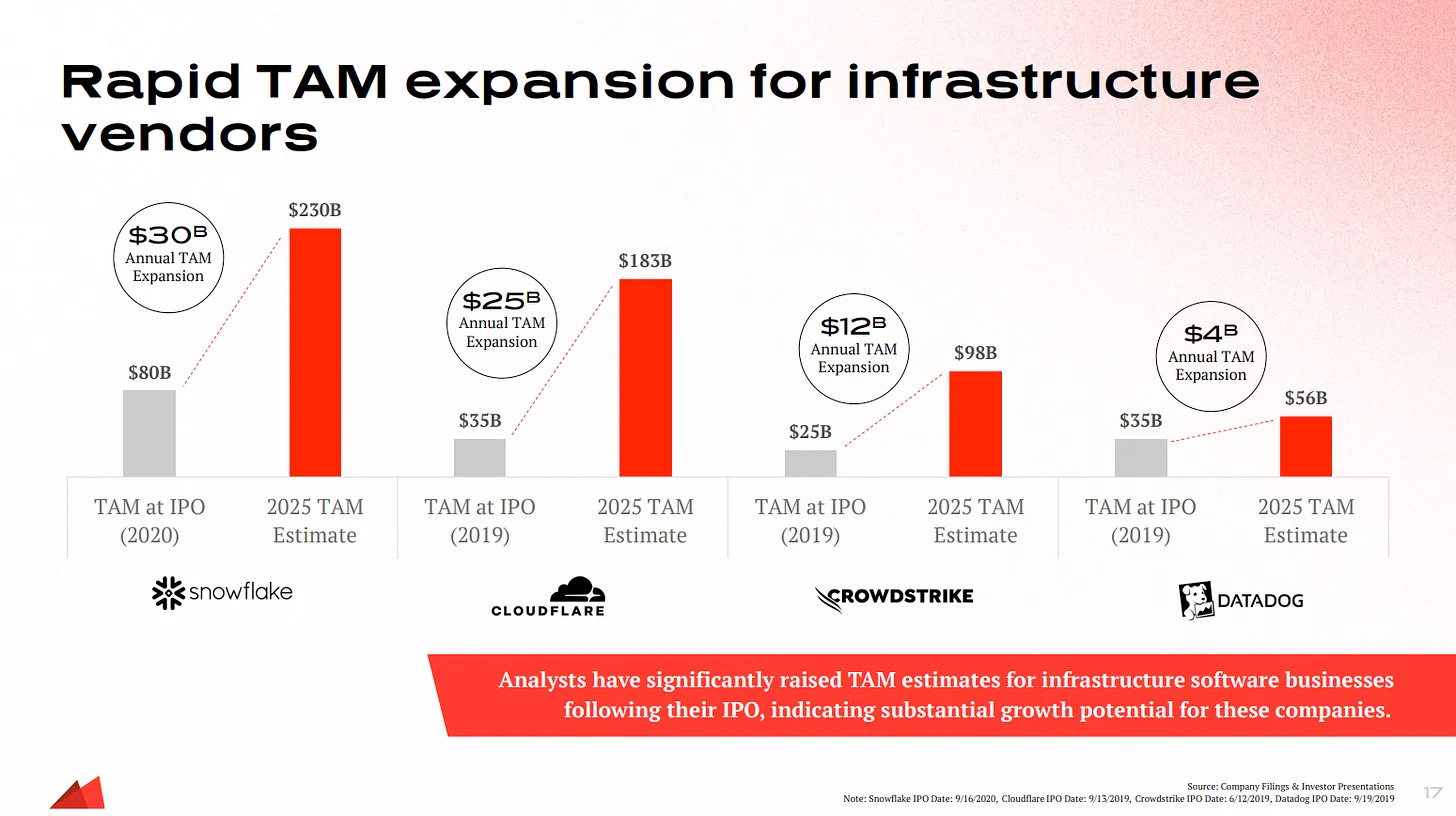

Beyond this, many of the top names in the public cloud continue to raise their total addressable market (TAM) estimates.

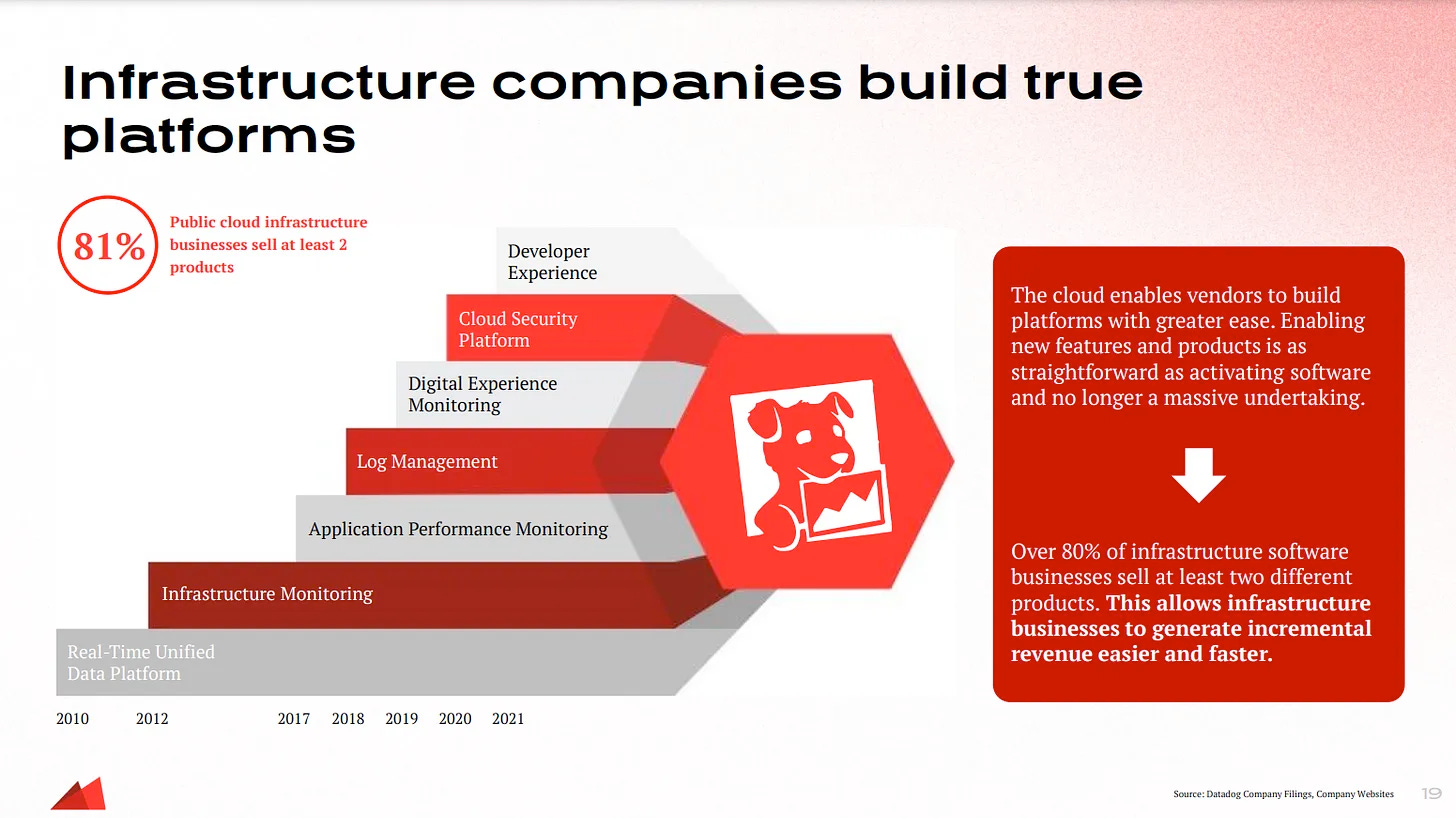

This can be attributed to the increased adoption of cloud across industries, as well as companies' ability to offer multiple products. For us investors, this is crucial because incumbent companies are adopting a mindset similar to the Department of Defense (DoD) approach to defense technology: “exploit what we have, buy what we can, and build only what we must.” Take Datadog, which Redpoint highlighted as the ideal case study. It started as an infrastructure monitoring company and, over the years, added product lines around application performance monitoring, logging, security, and developer experience. And it’s not just Datadog: across the public SaaS universe, over 80% of infrastructure businesses sell at least two different products, allowing them to better maximize their TAMs.

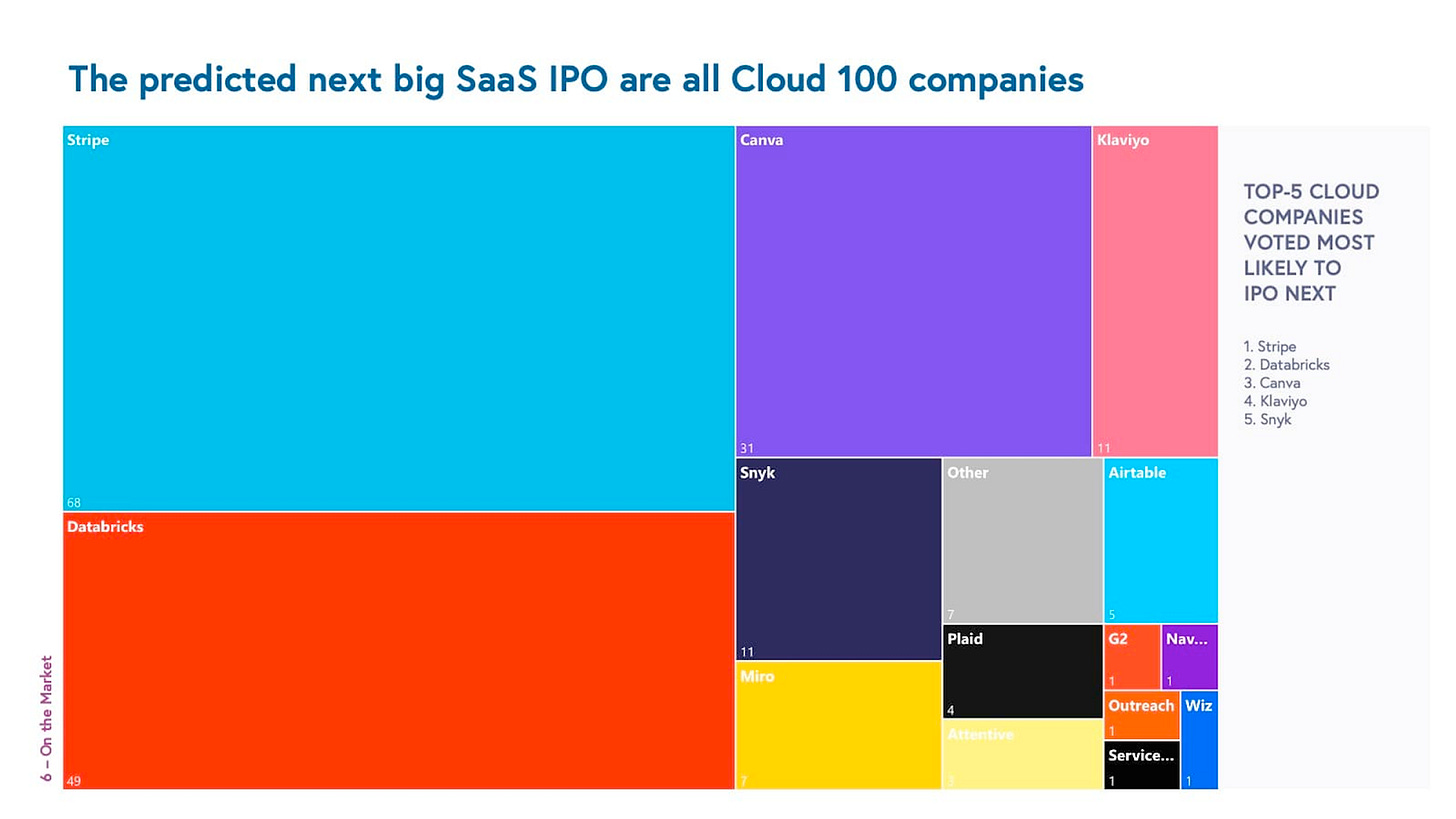

Our final topic is one that many people have thought about over the past two years: IPOs. Bessemer ran a poll to see which companies were most likely to IPO in the coming years. Though not all of them are infra companies, much of the backlog consists of cloud companies. This underscores public market investors' growing eagerness to allocate part of their portfolios to high-quality software companies poised to become the next market leaders.

The Future Trends Shaping Cloud Computing

Hopefully you now understand the past and present of cloud computing, but as investors, we get paid to think about the future. With this in mind, here are five key trends I expect to see in this market over the next couple of years.

Vendor Lock-in Challenges: Customers are increasingly wary of becoming too reliant on a single cloud provider, because switching providers can be costly and complex. The main contributing factors include proprietary technologies, APIs, and data storage formats that aren't widely adopted; high data transfer costs; specialized services tightly integrated with a specific provider's infrastructure; extensive integration and configuration requirements; and contractual or legal barriers to switching.

Rise of Hybrid Cloud Solutions: There's a growing trend towards hybrid cloud environments, which integrate on-premises solutions with cloud services, particularly for AI applications. This approach is gaining traction because it offers flexibility, scalability, and enhanced security: sensitive data can remain on-premises while workloads still leverage the cloud's computational power. There is also an enormous cost associated with training models in the cloud, which is giving rise to a new type of cloud… but that will be covered in the next post, so keep an eye out ;)

Emergence of Alternative Cloud Providers: New players like DigitalOcean, Vercel, and Fly.io are emerging as popular choices among developers seeking simpler cloud solutions that reduce configuration overhead and allow more time to be spent on development. This trend underscores a market shift towards platforms prioritizing ease of use and developer experience.

Simplicity and Performance Balance: There's a noticeable shift towards designing cloud services that offer a balance between simplicity and performance. The industry is moving away from complex configurations and towards more intuitive, easy-to-use services that don’t compromise on power or functionality.

Geographical Distribution Needs: As the global demand for cloud services increases, there's a trend towards developing cloud solutions optimized for different geographical locations to ensure a seamless user experience worldwide. This involves addressing latency issues and regulatory compliance across borders.
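To put rough numbers on the data transfer costs behind vendor lock-in, here is a back-of-the-envelope sketch in Python. It assumes a tiered, per-GB egress schedule in which each slice of a transfer is billed at its own tier's rate. The tiers and prices in `HYPOTHETICAL_TIERS` are made-up placeholders, not any provider's actual price list, and the names `EgressTier` and `egress_cost_usd` are my own.

```python
"""Back-of-the-envelope egress cost for moving data out of a cloud provider.

The tiers below are hypothetical placeholders, not real provider pricing.
"""
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EgressTier:
    upto_tb: Optional[float]  # cumulative upper bound of this tier in TB; None = unbounded
    usd_per_gb: float         # hypothetical price for data billed within this tier


# Hypothetical schedule, sorted ascending: the per-GB price drops as volume grows.
HYPOTHETICAL_TIERS = [
    EgressTier(upto_tb=10, usd_per_gb=0.09),
    EgressTier(upto_tb=50, usd_per_gb=0.085),
    EgressTier(upto_tb=None, usd_per_gb=0.07),
]

GB_PER_TB = 1000  # simplifying assumption: 1 TB = 1,000 GB


def egress_cost_usd(total_tb: float, tiers: List[EgressTier]) -> float:
    """Bill each slice of the transfer at the rate of the tier it falls into."""
    cost = 0.0
    billed_tb = 0.0
    for tier in tiers:
        if billed_tb >= total_tb:
            break
        # End of this tier's slice: the tier bound, capped at the total transfer.
        tier_end = total_tb if tier.upto_tb is None else min(tier.upto_tb, total_tb)
        slice_tb = tier_end - billed_tb
        cost += slice_tb * GB_PER_TB * tier.usd_per_gb
        billed_tb = tier_end
    return cost


if __name__ == "__main__":
    for tb in (5, 100, 500):
        print(f"{tb:>4} TB -> ${egress_cost_usd(tb, HYPOTHETICAL_TIERS):,.0f}")
```

Under these placeholder rates, moving 100 TB out works out to roughly $7,800 in egress fees alone, before counting the engineering time needed to re-integrate services elsewhere.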

Where Would I Write an Angel Check?

As mentioned earlier in this post, Amazon sparked a revolution in data storage when it introduced S3 eighteen years ago. Fast-forward to 2024, however, and the cloud computing landscape has evolved significantly. The very attributes that once made S3 revolutionary have become limitations in meeting the demands of modern developers, who now seek more intuitive, flexible, and globally optimized solutions. That need has driven a shift towards platforms like DigitalOcean, Vercel, and Fly.io, underscoring a growing gap between the outdated practices of traditional clouds and the requirements of today’s development environment.

Enter Tigris

Positioned at the forefront of this evolving landscape, Tigris embodies a vision that marries power with simplicity. Their mission is to liberate developers from the complexities of cloud infrastructure management, enabling them to dedicate their energies to creating innovative applications. Tigris directly addresses today's core challenges, offering solutions optimized for real-time applications and ensuring ease of use and global distribution.

The inception of Tigris is particularly timely, given the growing shortcomings of traditional cloud giants like AWS, Google Cloud, and Azure. While they continue to dominate the market, their designs, rooted in the operational practices of the last decade, are becoming obsolete. The rise of hosting platforms such as Fly.io and Railway, and even front-end services like Netlify and Vercel, has introduced functionality that aligns more closely with modern development practices, including easier multi-region support and a superior developer experience, which makes them more appealing to today’s developers.

Nevertheless, a significant hurdle remains: storage. The complexity of delivering a robust cloud storage solution has prevented many from fully transitioning to these newer platforms. This is where Tigris shines. Offering a globally available, S3-compatible distributed object-storage service, Tigris simplifies the application development process. It allows for writing and reading data in any region, with capabilities for handling concurrent updates across multiple regions and maintaining a consistent global dataset. Tigris stands as a global multi-master storage platform, eliminating the provisioning headaches and unwieldy interfaces of traditional clouds.
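To illustrate what "multi-master" means in general (this is not a description of how Tigris works internally), here is a toy Python sketch of one textbook approach to handling concurrent updates across regions: every region accepts writes locally, stamps each write with a (logical clock, region name) pair, and periodically merges state with its peers, keeping the highest-stamped value per key. This strategy is known as last-writer-wins. The `Region` class and the region names are hypothetical.

```python
"""Toy multi-master key-value store with last-writer-wins (LWW) merging.

Generic illustration of multi-region replication; not Tigris's implementation.
"""
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Version stamp: (logical clock, region name). Tuple comparison orders by clock
# first and breaks ties deterministically by region name.
Stamp = Tuple[int, str]


@dataclass
class Region:
    name: str
    clock: int = 0
    data: Dict[str, Tuple[Stamp, bytes]] = field(default_factory=dict)

    def put(self, key: str, value: bytes) -> None:
        """Accept a write locally, without coordinating with other regions."""
        self.clock += 1
        self.data[key] = ((self.clock, self.name), value)

    def get(self, key: str) -> Optional[bytes]:
        entry = self.data.get(key)
        return entry[1] if entry is not None else None

    def merge_from(self, other: "Region") -> None:
        """Pull another region's state, keeping the higher-stamped entry per key."""
        for key, (stamp, value) in other.data.items():
            mine = self.data.get(key)
            if mine is None or stamp > mine[0]:
                self.data[key] = (stamp, value)
        # Lamport-style clock update: later local writes outrank everything seen so far.
        self.clock = max(self.clock, other.clock)


if __name__ == "__main__":
    london = Region("eu-london")      # hypothetical region names
    virginia = Region("us-virginia")

    # Concurrent writes to the same key in two different regions.
    london.put("avatar.png", b"from-london")
    virginia.put("avatar.png", b"from-virginia")

    # Anti-entropy: exchange state in both directions, after which both agree.
    london.merge_from(virginia)
    virginia.merge_from(london)
    assert london.get("avatar.png") == virginia.get("avatar.png")
    print(london.get("avatar.png"))
```

Last-writer-wins guarantees that every region converges on the same value, but it silently discards one of two concurrent writes; systems that need stronger guarantees typically coordinate writes through transactions or consensus instead.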

The team behind Tigris is led by Ovais Tariq, who brings invaluable expertise from his time managing Uber's global storage and developing FoundationDB, a technology that has supported global object-storage systems at firms like Apple. This experience is pivotal and underpins Tigris’s potential to revolutionize cloud storage.

One thing that stood out is that the founding team is ex-Uber, which got me thinking about my two favorite cloud infrastructure startups. The first, Chronosphere, was founded by two ex-Uber engineers who helped create the open-source M3 monitoring project. The company plays in the observability segment of the market and has been wildly successful to date, most recently closing a $115M extension of its $200M Series C at a whopping $1.6B valuation. With investors such as GV, Founders Fund, General Atlantic, Greylock, and Lux Capital, it is certainly my number-one growth-stage company to watch in the coming years.

The other company, Onehouse, emerged from stealth in 2022 with a cloud data lake product built on top of the open-source Apache Hudi project. Led by Vinoth Chandar, a former Uber engineer who created Hudi, Onehouse has raised $33M across two rounds over the last two years from Greylock and Addition.

Given this track record, Uber appears to be a breeding ground for remarkably skilled entrepreneurs, which leads me to expect sustained excellence from Ovais and his team. I could not be more excited to see how they execute with this seed round in the coming years.

A Final Reflection

As we conclude our exploration into the ever-evolving realm of cloud computing, it's clear that the field is marked by rapid innovation and shifting paradigms. From the foundational impact of Amazon's S3 to the burgeoning ecosystem of new platforms like DigitalOcean, Vercel, and Fly.io, the cloud computing landscape is undergoing a transformation that speaks to the heart of modern development needs. These emerging trends underscore a broader narrative of adaptability, global optimization, and the quest for simplicity amidst complexity.

It is a multifaceted journey: each layer of the stack presents its own set of challenges and opportunities and is constantly evolving to meet the demands of businesses and developers alike. The shift towards platforms offering more intuitive user experiences and flexible, globally distributed services complements the traditional cloud infrastructure that dominated the past decade.

In this context, the story of Tigris serves as a case study in addressing specific challenges within the cloud storage domain, yet the broader implications of our discussion extend well beyond a single solution or company.

The trends outlined in this post, from tackling vendor lock-in to embracing hybrid cloud solutions and acknowledging the rise of alternative cloud providers, offer a roadmap for navigating the future of cloud computing.

Stay tuned for the next post, where we venture further into the cloud and cover all things AI/ML infrastructure.

If you enjoyed this article and want to see more industry and company deep-dives, then be sure to hit that follow button so you can be notified every time an article goes live!

Blasting off...🚀

Sources Consulted

"The History of Cloud Computing Explained." TechTarget. https://www.techtarget.com/whatis/feature/The-history-of-cloud-computing-explained.

Mcheick, Hamid, Fatma Obaid, and Haidar Safa. "Cloud Computing: Past, Current and Future." 2012.

Bugnion, Edouard, Scott Devine, Mendel Rosenblum, Jeremy Sugerman, and Edward Y. Wang. "Bringing Virtualization to the x86 Architecture with the Original VMware Workstation." ACM Transactions on Computer Systems 30, no. 4 (2012): 12:1-12:51.

Sandberg, R. "The Sun Network Filesystem: Design, Implementation and Experience." 2001.

"History of the Cloud." BCS - The Chartered Institute for IT. https://www.bcs.org/articles-opinion-and-research/history-of-the-cloud/.

"Cloud Infrastructure Part I: Data Centers." CloudInfrastructure. https://cloudinfrastructure.substack.com/p/cloud-infrastructure-part-i-data.

"Cloud Diagrams." Holori. https://holori.com/cloud-diagrams/.

"Breaking Down the Infrared Report." CloudInfrastructure. https://cloudinfrastructure.substack.com/p/breaking-down-the-infrared-report.

"Data Trends: Visualizing the Global Cloud Industry in 2023." Bessemer Venture Partners. https://www.bvp.com/atlas/data-trends-visualizing-the-global-cloud-industry-in-2023.

"Comparing AWS, Azure, GCP." DigitalOcean. https://www.digitalocean.com/resources/article/comparing-aws-azure-gcp.

"History of SaaS." BigCommerce. https://www.bigcommerce.co.uk/blog/history-of-saas/.