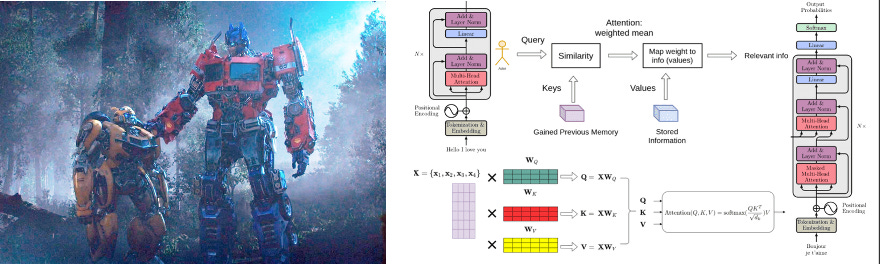

When you first think of transformers, what comes to mind? Is it the iconic cartoon, a component of a telephone line, or something else? For me, it was my childhood memories of Optimus Prime and Bumblebee saving the world from Megatron.

In the world of technology, specifically artificial intelligence (AI), transformers form the infrastructure for some of the hottest companies in venture.

As we look at what is currently being funded and talked about, we are seeing common themes come up again and again: “generative AI”, “ChatGPT”, “OpenAI”, “AI-enabled.”

Sound familiar?

Unless you have been living under a rock, you know that these words have been used more than any investor would like to admit. On its Q1 earnings call, Google said the term “AI” 50 times, closely followed by Meta at 49 and Microsoft at 46. So what is this craze all about, and why am I diagnosing every tech investor with a severe case of FOMO?

From an investor's lens, the buzz closely tracks the launch of ChatGPT in late November 2022. Since then, every startup being funded seems to have some sort of AI component. From a technologist's perspective, I am highly optimistic about the many use cases that generative AI is addressing. But many of these companies are just that: companies solving for a particular use case at the application level.

However, this is not an article about the current state of AI. There are more qualified people who have already gone ahead and done that analysis. Rather, I am here to argue that investors should be focusing on a different field of AI that is operating completely under the radar.

Before I introduce this emerging area, let us revisit our friend the transformer.

What is a transformer, and why should you care?

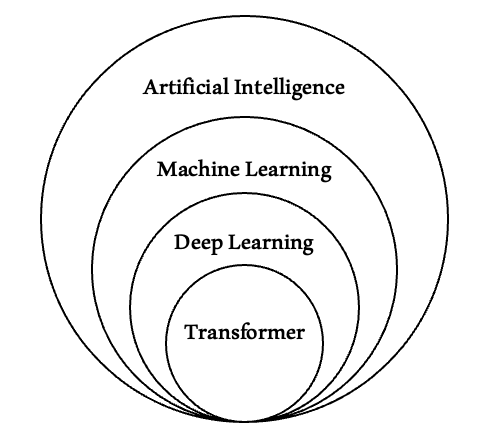

Simply put, a transformer is a type of neural network architecture that Google pioneered in the 2017 paper “Attention Is All You Need.” This architecture wildly outperformed previous deep learning models and set the foundation for many of the companies driving the AI craze today. Transformers revolutionized the training process by eliminating the need for large, labeled datasets and the costs associated with producing them. By mathematically identifying patterns, transformers can be trained on immense amounts of data and learn to recognize those patterns over time. Moreover, their parallel processing capabilities enable faster and more efficient model execution.

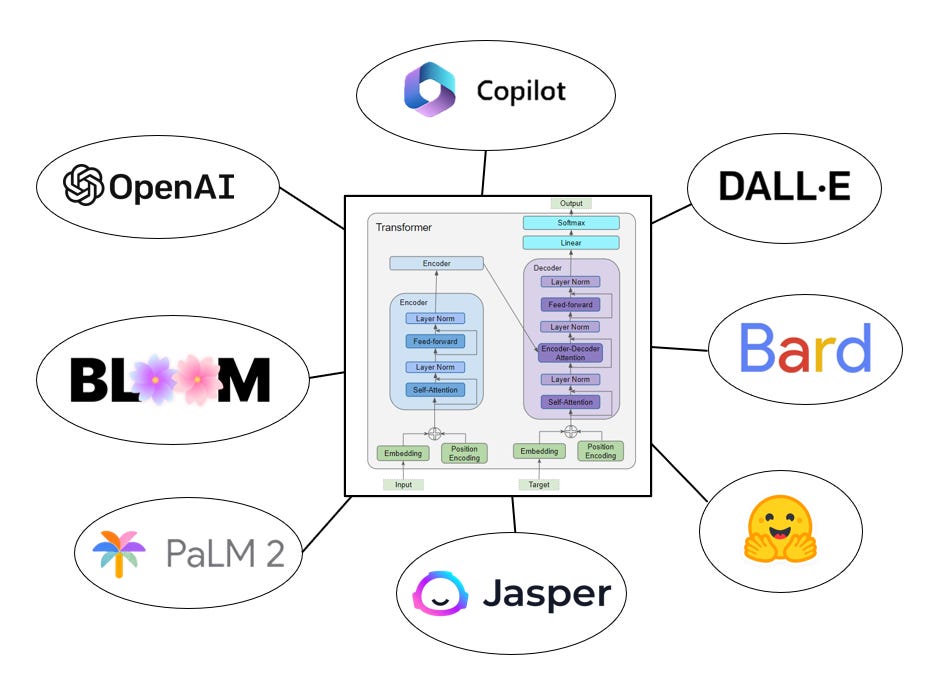
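For readers who want to see what "mathematically identifying patterns" looks like, here is a minimal sketch of scaled dot-product self-attention, the core operation inside a transformer. It is a toy illustration and not how any production model is written: the token vectors are made-up numbers, the function names are my own, and the learned projection matrices that real transformers apply before this step are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    # Each position is computed independently of the others, which is
    # why real implementations can process them all in parallel.
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        mixed = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# Three toy 2-dimensional token vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = self_attention(tokens, tokens, tokens)
```

Each output vector is a weighted blend of every token in the sequence, with more weight given to the tokens that look most similar. That blending is how a transformer lets each word "look at" its context.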

So what companies today use transformers?

Well, the short answer is just about all of them...

Let’s examine the architectures of some of the hottest models used in tech today: GPT / DALL-E (OpenAI), BERT / Bard / PaLM (Google), BLOOM (Hugging Face) and Copilot (Microsoft). When you look under the hood of each of these models, one thing is the same: transformers.

Think of transformers as an engine and each model as a different car company. They may all be cars, but each model has its own nuances/use cases that make it unique.

But there is one big drawback shared by all of these models: they cannot interact with the outside world.

So what are investors missing and where is this AI craze going?

Action transformers are the future of AI, and I predict that they will become a lot more mainstream as the model architecture becomes more capable. With use cases affecting everyone from the average consumer to SMBs and large-scale enterprises, action transformers represent an absolutely enormous market opportunity.

So what is an action transformer, and why was this important enough to be my first Substack post?

An action transformer is a type of neural network architecture built on top of a large transformer model. There it is again...our friend the transformer. This groundbreaking advancement gives AI the ability to act on information that a user passes into the model. Users will be able to define a task, and the AI will go and carry out the task in real time. Even more impressive is this model's ability to carry out multiple tasks simultaneously across a wide variety of software applications.

Think about any work that you do on a computer. How often are you staying on a single application or website throughout your entire session?

The answer is never.

Now what if there were an AI assistant that could, in real time, complete some of your most mundane tasks? Maybe you are an SDR who needs to manually add a lead to Salesforce, or a data scientist who needs to run some queries on a large data set. Action transformers allow these tasks to be automated with a degree of speed and precision that outpaces even the most efficient humans. Better yet, you as the user can provide feedback to ensure that the process is done to your liking. Though the use cases so far have been narrowly focused on business, this technology has the potential to revolutionize every industry.
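To make the "define a task, let the AI carry it out, and give feedback" loop more concrete, here is a toy sketch. Everything in it is hypothetical: the `Action` class, the `plan` and `execute` functions, and the fake CRM list are names I made up for illustration. The hard-coded `plan` function simply stands in for the model, and none of this reflects how any real action transformer is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    app: str      # which application the step targets
    command: str  # what to do there
    payload: dict = field(default_factory=dict)


def plan(task):
    """Hypothetical stand-in for the model: a real system would predict these steps."""
    prefix = "add lead "
    if task.startswith(prefix):
        name = task[len(prefix):]
        return [
            Action("crm", "open_new_lead_form"),
            Action("crm", "fill_field", {"name": name}),
            Action("crm", "save"),
        ]
    return []  # this toy planner only knows one kind of task


def execute(actions, crm, approve=lambda action: True):
    """Run each step against a fake CRM, checking with the user before each one."""
    draft = {}
    for action in actions:
        if not approve(action):  # user feedback can veto a step
            return False
        if action.command == "open_new_lead_form":
            draft = {}
        elif action.command == "fill_field":
            draft.update(action.payload)
        elif action.command == "save":
            crm.append(draft)
    return True


crm_records = []
done = execute(plan("add lead Jane Doe"), crm_records)
```

The `approve` callback is where user feedback comes in: the user can stop the process at any step before anything is saved.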

Think about it this way...every task done on a computer by a human has the potential to be completely automated by AI. This idea alone helps illustrate how tremendous this market opportunity is. And yet, everyone wants to talk about how wonderful generative AI is at solving the world’s problems.

And while generative AI truly is great, we are sitting right now in what investors like to call a bubble. And as we all learn as young children, bubbles eventually pop. With many of the generative AI companies operating in the application layer, there are only so many use cases and so many companies that will escape the bubble. History has shown us this time and time again, and yet investors continually fall into this FOMO trap.

To better understand where AI could go, let’s look at where the last platform shift went. Looking to the past to understand the present is an approach echoed by one of the greatest minds of the twentieth century: Carl Sagan.

And what was the last major platform shift?

Cloud computing.

Tomasz Tunguz, General Partner at Theory Ventures, did an incredible job breaking down the infrastructure and application layers of the cloud computing market. If you look at the three largest cloud providers, being sure to include only the cloud segment of each business, the combined market capitalization is in the ballpark of $2.1 trillion. Now, if you take a step back and look at the 100 largest cloud companies in the application layer, you arrive at just about the same value.
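A quick back-of-the-envelope calculation shows what those totals mean per company. This is only a sketch using the figures quoted above, and it assumes that "just about the same value" means exactly $2.1 trillion for the application layer. The variable names are mine.

```python
# Figures quoted above; the application-layer total is assumed to be exactly equal.
INFRA_TOTAL_USD = 2.1e12  # three largest cloud providers, cloud segments only
APP_TOTAL_USD = 2.1e12    # 100 largest cloud application companies (assumption)


def average_value(total_usd, company_count):
    """Average market value per company within a layer."""
    return total_usd / company_count


infra_avg = average_value(INFRA_TOTAL_USD, 3)
app_avg = average_value(APP_TOTAL_USD, 100)
ratio = infra_avg / app_avg

print(f"Average infrastructure company: ${infra_avg / 1e9:,.0f}B")
print(f"Average application company:    ${app_avg / 1e9:,.0f}B")
print(f"Ratio: {ratio:.1f}x")
```

Under these assumptions, the average infrastructure player is worth roughly $700 billion versus roughly $21 billion for the average application company. Averages hide a lot of variation, but the concentration of value is striking.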

So now let’s use this data to help guide my theory for AI.

Luckily, in the highly complex world of AI, we again have the very same basic structure: the application and the infrastructure layers. Examining action transformers makes it clear that this tech would form the infrastructure for the next wave of AI-native companies.

With this in mind, capturing the next AWS of foundation models will represent a highly lucrative opportunity for investors. Judging by the sheer size of the market for action transformer models, I believe investors should be hyper-focused on trying to identify the next infrastructure-layer incumbent. The upside potential for such companies is easily 10x that of companies in the application layer.

What are the limitations of action transformers?

Though this technology is very exciting, there is a reason I referred to it as emerging. Because these models require a completely new approach to training, they are currently only effective for tasks that repeat the same process over and over again. Though this can be helpful for some use cases, the real power will come from more advanced functionality. And with slow compute times, both the modeling side and the software side have a long way to go for today’s impatient consumers.

So when is this going to happen and what is limiting these models' functionality?

The short answer is years. Although action transformers are highly responsive to feedback, think about how many tasks the model will have to navigate nearly perfectly.

There is also a limiting factor that affects just about every AI company in existence: a shortage of talent. Academic and training programs just can’t keep up with the pace of innovation and discovery in AI, even though, with new models and breakthroughs arriving every week, the buzz has penetrated deep into academia. And with the incumbents holding much of the AI talent, it is very difficult for startups to poach the most talented researchers and engineers. Take Google’s acquisition of DeepMind in 2014 as an example. Although a bit dated, it illustrates the point that AI talent is expensive. With the deal valued at $500 million for a team of 50 people, Google effectively paid a whopping $10 million per team member. So with this in mind, much of the existing talent will be brought up to speed on AI-native development through upskilling platforms...which is another great investment opportunity ;)

As with all technological shifts, it will take time for the talent pool to catch up and adapt to the ever-changing landscape. Combine this with the immense amount of time it takes to train models, and this reality is still many years away. So don’t worry, your job will not be replaced by AI for the foreseeable future.

Is anyone building in the action transformer space?

San Francisco-based Adept.ai is where the whole idea of an action transformer was born. Their model, dubbed ACT-1 (not the standardized test), aims to address the current shortfalls of generative models. The company raised $350 million of Series B venture funding in February, in a deal led by General Catalyst and Spark Capital that put the company's pre-money valuation at $650 million. Adept has deep expertise in the space, with founders who co-authored the Google paper mentioned above and a team led by a former VP of Engineering at OpenAI. As for future direction, the company is making progress in training ACT-1, which learns from product documentation and local learning to execute tasks that current models cannot do without connections to external applications. The goal is to one day have a model that can seamlessly navigate every software tool, API, and web application that exists.

Conclusion

With leading VCs seeing AI as the next platform shift, some even comparing it to mobile, it is hard to not want to jump on the hype train. Frankly, I do agree that AI is the future. As investors, it is our job to distinguish the winners from the rest of the noise - a job that is difficult in itself.

Although companies in the infrastructure layer are going to have large capital requirements, identifying the winners in this space could yield returns that are hard to imagine. Adept may be the first, but I would be shocked if some of the incumbents are not already thinking about this next generation of AI. With the current macro backdrop and the influx of AI startups, the companies that get through this bubble could very well form the basis for the next generation of category-defining companies. Luckily, one thing is certain...time reveals all truths.

If you enjoyed this article and want to see more industry and company deep-dives, then be sure to hit that follow button so you can be notified every time an article goes live!

Blasting off...🚀